Frontier Model Forum +

Frontier Design

AI Safety + Security Services Menu

Alexa Courtney | Founder and CEO | alexa@fdg-llc.com | 571-275-3259

Steve Sheamer | Chief Operating Officer | steve@fdg-llc.com | 202-549-3320

Christopher Cook | Senior Director of AI | christopher@fdg-llc.com | 217-899-5643

Olivia Shoemaker | Lead Advisor for AI | olivia@fdg-llc.com | 952-999-0966

Overview

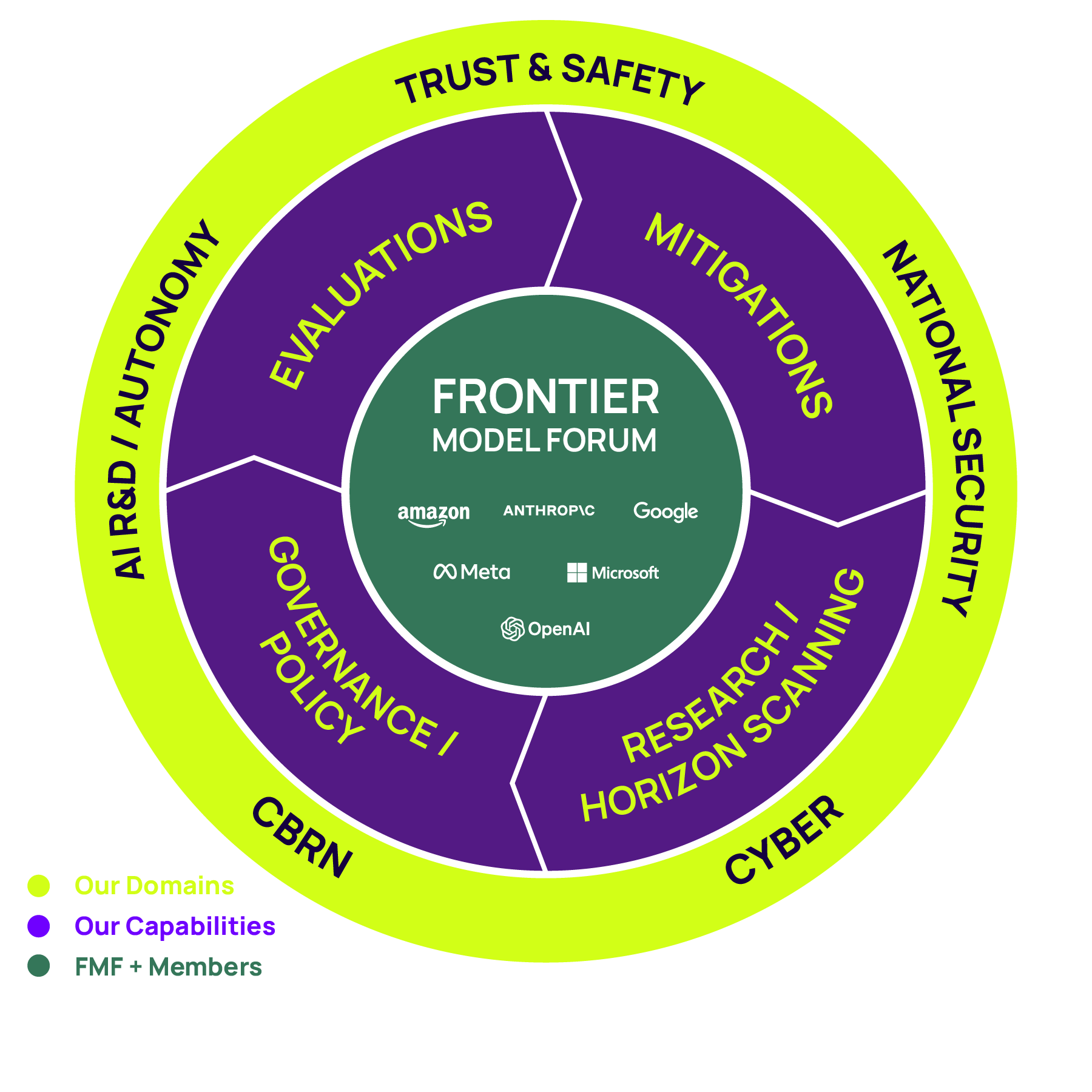

The Frontier Model Forum (FMF) is advancing standards for understanding, evaluating, and mitigating the most significant risks associated with capable AI models. Frontier's AI Safety & Security practice specializes in the topics that FMF cares about, including:

Real-World Risks - Our experts include law enforcement, intelligence analysts, warfighters, and policy leaders with decades of front-line experience addressing real-world risks. Our operational experience grounds our work in realism and direct expertise.

Best Practices - We develop and promote shared safety and security standards, evaluation protocols, and operational guidance that align with FMF’s mandate to identify best practices and support standard development for safe, responsible frontier AI deployment.

Advancing Science - Our team contributes to scientific research and methodological innovation in frontier AI safety, risk frameworks, and independent evaluations, supporting FMF’s goal of advancing the science of frontier AI safety and enabling robust, evidence-based risk mitigation.

Frontier was founded in 2015 to support organizations who are tackling the world’s most pressing national security challenges. Our founder was in Manhattan on 9/11 and has spent her career focused on countering violent extremism and civilian harm. In 2024, we recognized the potential national security risks posed by increasingly advanced AI models and began working with some of the top Frontier labs to address these risks. Through our decades of work in national security, counterterrorism, and violent extremism, we have established a network of 1,000+ world-class subject matter experts (SMEs) across CBRN risk mitigation, counterterrorism, law enforcement, and national security. Our networks include the world’s leading experts in chemical and biological weapons.

Our work is routinely used to inform AI risk decisions for leading AI labs, NIST CAISI, and the AI Safety Fund. As of December 2025, Frontier is partnering with FMF as a client to develop a biorisk safeguards evaluation that can be distributed to FMF members and beyond to reduce biorisk associated with their large language models (LLMs).

Due to our Non-Disclosure Agreements (NDAs) and honoring our client commitments, we are limited in our ability to discuss some of our exciting work. In this document, we seek to demonstrate that we have the experience, expertise, and ability to operate with the speed and efficiency required to execute at the pace of relevancy for FMF and your members.

Please note: This document contains Frontier’s confidential and proprietary information shared with the Frontier Model Forum and its members for the exclusive purposes of evaluating Frontier as a commercial partner. No confidential or proprietary information from any of our clients is disclosed.

Frontier’s Differentiators

Frontier works with FMF labs to integrate real‑world operational reality directly into model evaluation, mitigation, and data curation — at frontier speed.

Abstract Risks

Real-World Risks: Our approach prioritizes authentic real-world scenarios with direct applications. We ensure understanding of technical knowledge by engaging subject matter experts from operationally-relevant backgrounds, including professionals with hands-on experience who previously served agencies such as FBI, DHS, CIA and Special Operations Command.

One-Off Studies

AI Safety Lifecycle Approach: We specialize in turn-key services that capture the entire lifecycle needed for safe and secure models – from the initial study / research design, to execution, through provisioning the software engineering and development necessary to implement evaluations and mitigations. We work with our clients to build bespoke products that nest within their existing governance and policy frameworks and advance the state of their risk science.

Black-Box Solutions ->

Collaboration: We support clients operating under a variety of models and are particularly effective in creative, iterative environments. We value close collaboration and are happy to involve your team as extensively as it aligns with your needs and preferences.

English-Centric Analysis ->

380+ Languages: Threats are omnilingual. We work in over 380 languages to more accurately model global risks and red team all of the possibilities associated with threat actors in English, Mandarin, Russian, Arabic, and beyond. We work in languages with 1 billion + speakers, as well as rare and endangered languages with as few as 50 speakers.

Speed of Academia

Speed of Model Release: Frontier’s streamlined methodologies deliver superior results efficiently and cost effectively. We continuously deliver high-quality results on increasingly short timeframes to keep pace with rapid advances in AI (e.g, designing and delivering rigorous uplift studies with 500+ participants in 2-4 weeks). We have invested heavily in our processes and automation to meet the increasingly demanding timelines of our clients. This speed to execution and cost efficiency is evidenced by our AI clients expanding Frontier’s project scopes from initial proof of concept to large scale projects completed rapidly.

Conventional Wisdom

Out-Of-The-Box Thinking: We take pride in being able to capture novel and emerging dynamics so that every client can walk out of the room hearing something that they haven’t thought about before. We often pair technical experts with ‘creative partners’ (e.g, imagineers from Disney or science fiction authors) from our bench of expert red-teamers to ensure that we aren’t missing possibilities.

Small # of Experts ->

1,000+ Subject Matter Experts: We have worked with over 1,000 subject matter experts in domains like CBRN, cyber, AI autonomy, disinformation, etc, giving us the opportunity to offer bespoke expertise (e.g., pairing virologists with machine learning engineers, pairing terrorism analysts with cybersecurity experts and science fiction writers, etc).

Overview of Core Capabilities

What Makes Frontier Unique:

Real World Operational Expertise

Full AI Safety Lifecycle

380+ Languages

1,000+ SMEs

Speed

Frontier works across domains in public safety and national security to address the complex and multi-disciplinary potential risks posed by AI. Our methodological expertise allows us to choose from a diverse suite of tools, ranging from automated benchmarks, to red teams, and uplift studies, so we can apply the right test to the research question.

In particular, we focus on the following domains:

Chemical, Biological, Radiological, and Nuclear (CBRN)

Cyber

Autonomy / AI Research & Development

Trust & Safety (e.g., Disinformation, Election Security, Child Sexual Abuse Material)

Here, we provide an overview of our core services that we use across those domains.

Evaluations

Types

Automated Benchmarks

Red Teams

Uplift Studies

Examples

Automated Biorisk Benchmark

AI Autonomy Red Team

Cyber Uplift Study

Evaluations

Types

Automated Benchmarks

Red Teams

Uplift Studies

Examples

Automated Biorisk Benchmark

AI Autonomy Red Team

Cyber Uplift Study

Mitigations

Types

Training Data for Classifiers / SFT

Safeguard Evaluations

Do Not Train Libraries

Examples

CBRN Training Dataset

Biorisk Safeguard Evaluation

CBRN Data Filtration

Mitigations

Types

Training Data for Classifiers / SFT

Safeguard Evaluations

Do Not Train Libraries

Examples

CBRN Training Dataset

Biorisk Safeguard Evaluation

CBRN Data Filtration

Domain Specialties

Chemical, Biological, Radiological, and Nuclear (CBRN)

Frontier specializes in chemical, biological, radiological, and nuclear (CBRN) risks and their intersection with artificial intelligence in ways that could dramatically amplify catastrophic potential. All of the Frontier Model Forum’s members currently evaluate biological risk, and some also evaluate chemical, radiological, and nuclear risk.

Uniquely, Frontier has world-class subject matter expertise in chemistry, radiology, and nuclear threats, as well as AI design and software engineering, enabling us to provide unique insight for evaluations, mitigations, and other research across the full CBRN spectrum, rather than solely focusing on biology. Frontier is focused on ensuring that CBRN safeguards at the model and societal level keep pace with AI development to prevent catastrophic risk.

You can review the following CBRN-related examples in this menu:

Cyber

Cyber risks intersect with artificial intelligence in ways that could fundamentally transform the global threat landscape. All of the Frontier Model Forum’s members focus on cyber risk – both in terms of AI model security, as well as the potential for AI models to uplift malicious actors engaged in offensive cyber activities.

Frontier works with a group of leading cyber x AI scholars and professors to monitor the bleeding-edge of cyber capabilities. Our in-house software engineering team works closely with these researchers to implement these risks into real-world experiments through evaluations, and then design mitigations when risks are identified.

You can review the following cyber-related example in this menu:

AI R&D / Autonomy

AI research and development poses unique recursive risks as AI systems increasingly contribute to their own improvement and operate with greater autonomy. All of the Frontier Model Forum’s members conduct evaluations or experiments on AI research and development / autonomy, which are often cited in their model cards.

Frontier specializes in creative, out-of-the-box experimentation on AI autonomy. As an example, this includes the ability to rigorously assess catastrophic risk scenarios, such as those within the CBRN domain. We can analyze how an LLM behaves when it is assigned the role of a scientific principal investigator in a biology laboratory and exposed to incentives and resources that encourage it toward malicious activity.

Frontier also specializes in supporting senior military leaders or AI decision-makers in understanding the possibilities of AI autonomy, to calibrate confidence while providing operational risk management (ORM) strategies within said systems.

You can review the following AI R&D -related example in this menu:

Trust & Safety

Cutting-edge AI labs also deal with significant risks below the catastrophic threshold, ranging from disinformation and persuasion, to child safety (e.g., child sexual abuse material, physical harm, developmental/cognitive harm), and mental health and wellbeing.

Frontier specializes in adapting cutting-edge methodologies developed for catastrophic risks and applying them to trust and safety challenges. We have extensive subject matter expertise in topics like psychology, election security, disinformation, and child safety. Our repeatable processes allow us to work with experts across a wide array of complex challenges to improve model safety and security.

You can review the following trust and safety-related examples in this menu:

National Security

Artificial intelligence introduces profound risks to national security by potentially destabilizing strategic balances and escalating conflicts. Frontier has 10+ years of experience working with national security clients, including federal clients in the defense & security space (specific clients available upon request).

Frontier uses its technical methodologies (e.g., evaluations, mitigations) to support highly-technical risk mitigation efforts in the national security and AI communities (e.g., evaluating how a near-peer competitor like China may exploit U.S.-made AI models). Beyond the tactical level, Frontier leverages its capabilities in facilitation, organizational change, and leadership development to work with senior national security leaders to prepare for the future of warfare.

You can review the following national security-related example in this menu:

Core Capabilities

⏵ Evaluations

Automated Benchmarks / Long Form Tasks

Frontier uses automated benchmarks to provide light-weight testing for capabilities of concern. We work with our network of subject matter experts to develop schemas (e.g., a threat chain for bacterial biological risk), develop prompts, engineer prompts for optimized performance, and assess LLM responses. We have the capabilities to train and use autograders, as well as use expert human reviewers to validate autograder outputs.

Frontier’s robust technical team allows us to easily integrate tools into pre-existing models (e.g., integrating agentic functions into a pre-release model to optimize capabilities during testing). Given the proprietary nature of many of our previous evaluations, we cannot disclose the specific tools or evaluations, but they were designed to test and optimize various model’s capabilities in coding, alignment/assembly, agentic functions, web and database searches, and multi-modality.

Whenever we develop benchmarks, we follow best practices like:

Establishing human baselines

Utilizing standard frameworks like Inspect for repeatability

Preventing saturation/overfitting from iteration

Designing development, test, and holdout sets

Validating autograders with human reviews

Sample Deliverables: Automated and human-reviewable benchmarks; human baselining or calibration of existing benchmarks (proprietary or open source)

Example: Automated Biorisk Benchmark

Frontier developed a bacterial biothreat schema and associated benchmark for the AI Safety Fund together with one of our trusted collaborative partners.

This benchmark improved on existing biorisk benchmarks by:

Measuring Uplift - only includes items that are not answerable on the internet by novices

Accounting for Operational Considerations vs. Strictly Scientific Information - includes items for evading detection from authorities

Examining the Linkages Between Threat Elements - Previous benchmarks like WMDP appear more focused on high-level biothreats rather than lower-end threats, but different malicious actors represent different potentials both for harm and for utilizing AI models to cause such harm.

Accounting for Differentially Capable Actors - While many tasks rely on knowledge of biological sciences and bioengineering, other operational aspects of a biological threat are more general-purpose in nature. These factors interact in specific and often not immediately obvious ways with technical biological knowledge in the biothreat chain.

We designed a Biothreat Benchmark Generation (BBG) schema with 9 categories of threat and Bacterial Biothreat Benchmark (B3) dataset of 1,060 diagnostic prompts. You can read more in a series of arxiv papers.

Red Teams

Frontier uses red-teaming to measure risk ceilings and thresholds by understanding how high-skill, subject-matter experts might engage vis a vis other skill-level actors. We typically approach red teams by pairing subject matter experts in a given domain (e.g., cyber, CBRN, election security) with “creative partners”, who are drawn from our bench of expert red-teamers. These creative partners come from diverse backgrounds – former Disney imagineers, film industry professionals, terrorism experts – but share the common factor of having received top scores from reviewers on previous red teams. Pairing these creative partners with a trusted bench of domain experts allows us to push the limits of their creativity and LLM parameters or capabilities.

Sample Deliverables: High-risk prompts, threat ideation scenarios, risk profiles

Example: Red Team on AI Autonomy / Loss of Control

Frontier has the capability to run red teams on loss of control in a military context, leveraging operational and military experts to understand how AI models integrated into decision-making tools may elevate the risks for significant harm.

Involving retired DoD program officers and warfighters with scenario-relevant experience, former intelligence analysts with operational experience, AI safety researchers, and legal/ethics reviewers ensures that our red teams model real-world risks. The participants collaborate in a structured, eight-hour workshop to pursue a shared objective: modeling the most significant and potentially catastrophic risks arising from the integration of AI into military operational workflows, including decision support, autonomous weapons systems, and supporting functions such as supply chain prediction.

Our goal in every red team is to walk out hearing something novel or new that we (and our clients) have never heard before. There’s a reason our clients rehire us time and again to run these types of simulations. During these red teams, we utilize advanced technical capabilities that allow us to:

Provision model access

Integrate relevant tools

Capture back-end data on participant-model interactions for analysis

The outputs of all of our red teams involve datasets and analysis of participant-model interactions, as well as structured threat scenarios based on the novel risks that participants surface.

Uplift Studies

Frontier uses uplift studies to assess the overall marginal risk from access to an LLM as compared to the counterfactual resources on the internet. Our uplift studies use the general principle of assessing counterfactual value across several different testing modalities, including paper-based evaluations, real-life simulations and adversary emulation exercises, and can be conducted in wet lab settings for CBRN thanks to one of our trusted university partners.

In all of our uplift studies, we follow best practices such as:

Pre-defining threshold for performance

Randomizing participants across control and treatment groups

Where possible, using large enough samples to achieve statistical significance

Using human performance to help define baselines

Using a consistent counterfactual as a baseline (e.g., either the internet, or a performant open-source model)

Masking the model tested to prevent bias from human opinions on said model

Sample deliverables: Analysis of uplift across treatment and control groups, uplift metrics compared against predefined thresholds.

Example: Cyber Uplift Study

Frontier is working with one of its trusted partners to run an uplift study focused on risks to critical infrastructure in the U.S., a key national security concern.

Participants in our uplift studies are randomized across control (no model) and treatment (model access) groups. In many of our uplift studies, we also establish baselines of novice and / or expert performance to help validate the study design. These baselines can often help us establish threshold criteria. For example, does a novice + an LLM perform as well as an expert without an LLM?

For our cyber uplift studies, we typically design tasks that have objective results (e.g., capture the flag style challenges) to eliminate subjectivity of results, as well as have intermediate results or metrics (e.g., time to task completion, error rates, quality scores) to avoid binary outcomes. We work with our bench of experts to serve as real-time judges or post-exercise reviewers. We are then able to draw conclusions about the degree of improvement achieved by participants with model access.

⏵ Mitigations

Training Data for Classifiers / Supervised Fine-Tuning

Frontier can develop training data sets across domains to inform the performance of mitigatory measures like classifiers. We can design, generate, and review datasets. We commonly peer-review datasets for accuracy and consistency, as well as evaluate model outputs in response to these datasets. These datasets can be useful in catastrophic risk domains (e.g., CBRN), as well as trust and safety domains (e.g., mental health, election security).

We can develop training data at the prompt level, as well as at the conversation level, which can give a more robust picture of how an adversary might exploit an LLM to further their aims.

Example: CBRN Training Dataset

Frontier creates training datasets to help improve classifiers, specifically for components of CBRN risk. We can tag these prompts with several categories of metadata to ensure coverage of the risk surface, as well as to enable further mitigation efforts (e.g., a classifier may respond to one risk area better than another). In the biorisk space, these categories of metadata may include components like:

Threat Chain Phase: We can base our prompts on a biorisk threat chain that we have previously developed for the AI Safety Fund alongside our trusted partners. You can read more here. This threat chain covers both technical / scientific, as well as operational components to planning an attack, such as the need for storage and transportation.

Threat Level: We divide our prompts into categories of risk, ranging from benign to violative or disallowed.

Agent Type: We prioritize agents depending on threat scenario and expertise level (e.g., non-state actors will likely pursue different types of agents than state actors will).

Uplift: We develop prompts that could provide uplift over counterfactual resources on the internet. For this reason, we often focus on capabilities of concern like tacit knowledge or troubleshooting that are less easily performed by an internet search.

Safeguard Evaluations

Frontier conducts safeguard evaluations to determine whether mitigations like content filters, refusals policies, or monitoring are working under realistic and adversarial conditions. Our goal is to test the effectiveness, robustness, and failure modes of safeguards in preventing or mitigating harmful outcomes (e.g., unsafe advice, policy violations, misuse, or loss of control), while preserving legitimate and beneficial use.

Our safeguard evaluations typically examine coverage (what risks are addressed), as well as reliability (how consistently safeguards are triggered). We follow best practices like:

Measuring false positives and false negatives

Using accurate and representative prompts to test safeguards

Using advanced techniques / edge cases to test the limits of safeguards

Example: Biorisk Safeguards Evaluation

Frontier has developed automated biorisk safeguards to systematically test LLM responses to hazardous biological questions across a threat chain from acquisition through deployment. These evaluations can include anywhere from 30 - 500+ questions depending on their complexity and aim. Especially for threat scenarios dealing with lower-skilled actors, we can develop base questions and permutate different agents through the questions to scale up the evaluation.

Based on the results from the evaluation, we can also “blue-team” results that were flagged as hazardous and provide more constructive response types, or provide further training data to improve classifier performance.

Do Not Train Libraries

Frontier can develop “do not train” libraries of academic, informal (e.g., blog posts, forums like reddit), or multi-model content to avoid LLMs being trained on content that we would consider hazardous from a biorisk perspective. We can do this proactively, by searching the open internet, or reviewing corpuses of data for training to perform data filtration.

In this space, we are pioneering best practices, including:

Using systematic processes to improve comprehensiveness

Blending human-generated approaches (e.g., consulting with experts on their personal archives, working with experts in information science), with tech-driven approaches (e.g., fine-tuning LLMs to find related content or review web-scraped content)

Working multilingually (Frontier has the capability to work with speakers in over 380 languages across high- and low-resource languages)

Example: CBRN Data Filtration

Frontier has the capability to create libraries with tens of thousands of CBRN-relevant documents that are reviewed by experts for their potential hazard. These documents can be tagged by a variety of metadata (e.g., agent addressed, domain, stage of the threat chain, etc) to help AI labs address various components of risk in the pre-training process.

⏵ Governance / Policy

Responsible AI and Safe AI Frameworks

Frontier can help leading AI labs and other clients operationalize their responsible scaling policies, or other AI governance frameworks, by designing evaluations that nest within existing threat scenarios. This operationalization generally includes setting thresholds, creating evaluation suites, developing safety cases, and pre-designing mitigation suites so they can be employed quickly if needed. We use best practices like:

Designing from first-principles (e.g., in safety cases)

Pre-assigning thresholds to improvement transparency and commitment

Nesting evaluations within safety cases or other frameworks to create a clear chain of logic from evaluation results and their associated mitigations

Example: National Security Safety Cases

Frontier creates safety cases that are specific to areas of national security x AI risk. As an example, adapting our established safety-case methodology to the operational, legal, and strategic constraints unique to defense use can help us prioritize risks in the U.S. national security and Department of Defense deployment contexts.

Safety cases can articulate explicit claims about when and how AI systems can be used safely in DoD settings, supported by structured arguments and evidence spanning model behavior, human-in-the-loop controls, operational safeguards, and governance processes. They incorporate realistic threat models including adversarial manipulation, escalation risks, and degraded or contested environments and assess both technical failure modes and socio-technical risks such as operator over-reliance, accountability gaps, and command-and-control integration. Taken together, national security safety cases provide a concrete, auditable framework for evaluating whether specific AI deployments meet acceptable risk thresholds for U.S. national security missions, and for identifying the conditions, mitigations, and oversight mechanisms required for responsible use.

⏵ Risk Research / Horizon Scanning

Horizon Scans

Frontier can conduct horizon scans, threat ideation processes, and forecasting to imagine and scope future risks for testing. We view these processes as an iterative loop, where our evaluations are guided by both current and potential future capabilities, ensuring we are prepared for what comes next.

Example: Election Security

Frontier conducts horizon scans about potential risks from AI, across many different domains. Several clients have asked us to consider how AI might affect election security in the U.S.

We have analyzed risks ranging across the full election lifecycle, from voter information and campaign activity to election administration and post-election processes. Tools like red team workshops help us systematically explore plausible misuse pathways, including large-scale disinformation and persuasion campaigns, synthetic media targeting election officials or candidates, automation of fraud narratives, and exploitation of administrative or information asymmetries. Furthermore, we can identify second-order risks such as erosion of public trust and amplification of polarization.

We construct our scenarios based on realistic adversary models ranging from opportunistic domestic actors to well-resourced foreign influence operations, and stress-test against existing technical, legal, and institutional safeguards. Red teams like this can produce a structured map of vulnerabilities, escalation dynamics, and failure modes, alongside concrete mitigations and policy-relevant insights, as well as long-tail risks, which are essential for horizon scanning.

Multi-Lingual Risk Analysis

Frontier has worked in over 380 languages globally over the last several years, giving us world-class capabilities when it comes to multi-lingual risk analysis.

Existing threat evaluations, especially for catastrophic risks like CBRN, suffer from a common limitation: nearly all are run exclusively in English. While many threat actors that we conceptualize in the CBRN domain speak English (e.g., domestic extremists in the U.S.), some threat actors of concern in these domains (e.g., salafi-jihadists, near-peer competitors like China and Russia) are less likely to speak fluent English or use large language models (LLMs) in English. Multi-lingual analysis can help:

Model real world threat actors as closely as possible, by bringing in deep subject matter experts (e.g., experts in Al Qaeda’s experimentation with chemical weapons, or experts in Boko Haram’s use of emerging technology for propaganda).

Better examine uplift in languages other than English (e.g., it’s possible that the internet as a baseline is less useful in non-English languages, or that model safeguards are less effective.

Example: Multilingual Disinformation Red Team

Frontier leads evaluations and risk analyses in non-English languages. For example, we have created a multilingual red team focused on disinformation, examining how advances in AI capabilities could materially enable new forms of large-scale, adaptive, and targeted information manipulation across languages and regions.

This type of red team assesses how AI lowers the cost and increases the speed, volume, and personalization of disinformation through automated content generation, rapid cross-lingual translation and localization, synthetic media, and iterative narrative optimization based on audience response. An exercise like this can map concrete misuse pathways and escalation dynamics, identify where current detection and governance mechanisms break down under AI-driven scale and adaptability, and surface priority interventions for mitigating AI-enabled disinformation in a multilingual information environment.

For example, we can examine this type of manipulation in the context of elections. Paraphrasing election-related false claims across multiple languages helps us evaluate whether semantic similarity detection degrades through translation. One such approach includes a natural language processing (NLP) sentiment analysis to measure differences in negative/positive bias across languages.

⏵ Bottleneck Analysis

Frontier models bottlenecks to malicious actors achieving their aims, which can help concentrate evaluation and mitigation efforts in the areas where LLMs are most likely to enable threats.

In the CBRN domain, for example, we define a bottleneck as something that inhibits progress to the next stage in a threat chain for a given biological or chemical agent. The U.S. Center for AI Standards and Innovations (CAISI) notes that there are several types of these bottlenecks, including:

Scientific

Operational

Motivational: using a CBRN weapon vs. a different means

We consider both technical AND operational considerations (see our talk here on why), regardless of domain.

Example: Biorisk Bottleneck Identification

Frontier conducts analyses of bottlenecks to malicious activity. For example, Frontier creates analyses focused on identifying points in the agent threat chain where capabilities like tacit knowledge, troubleshooting, or protocol development could elevate catastrophic risk. We can examine how advances in biotechnology or automation might shift the risk landscape over time.

This type of work can highlight which bottlenecks serve as critical risk-reducing barriers, which are most sensitive to technological change, and where governance, biosafety practices, and monitoring interventions can most reliably reduce the probability and impact of high-consequence biological events.